
Readable stream from chunked transfer encoding seems to miss some chunks #20


Without seeing any code, there's very little I can do to investigate. Is there a way for me to reproduce your issue on my end? A repository I can clone, or a code snippet I can run? Does the issue still occur if you fetch the entire response at once and then consume it as an `AsyncRead`, for example like this?

```rust
use futures_util::io::{AsyncReadExt, Cursor};
use js_sys::Uint8Array;
use wasm_bindgen::{prelude::*, JsCast};
use wasm_bindgen_futures::JsFuture;
use web_sys::{window, Response};

// `fetch_and_read` is a hypothetical name: a plain `async fn main` does not compile
// without an async runtime, so this is exported to JS, which can await the Promise.
#[wasm_bindgen]
pub async fn fetch_and_read() -> Result<(), JsValue> {
    // Make a fetch request
    let url = "https://rustwasm.github.io/assets/wasm-ferris.png";
    let window = window().unwrap_throw();
    let response_value = JsFuture::from(window.fetch_with_str(url)).await?;
    let response: Response = response_value.dyn_into()?;

    // Read the whole response body into a JS ArrayBuffer
    let buffer_value = JsFuture::from(response.array_buffer()?).await?;

    // Copy the ArrayBuffer into a Rust Vec
    let vec = Uint8Array::new(&buffer_value).to_vec();

    // Consume the Vec through the futures `AsyncRead` interface
    // (futures' Cursor, since std::io::Cursor does not implement AsyncRead)
    let mut cursor = Cursor::new(vec);
    let mut buf = [0u8; 100];
    loop {
        let n = cursor
            .read(&mut buf)
            .await
            .map_err(|e| JsValue::from_str(&e.to_string()))?;
        if n == 0 {
            break;
        }
        let _bytes = &buf[..n];
        // Do something with the bytes
    }
    Ok(())
}
```

That way, we can figure out if the issue is really caused by […].


Here is the code to convert the `ReadableStream` to the async reader:

```rust
use futures::future::Either;
use futures::io::{AsyncBufRead, BufReader};
use futures::TryStreamExt;
use js_sys::Uint8Array;
use wasm_bindgen::{prelude::*, JsCast};
use wasm_bindgen_futures::JsFuture;
use wasm_streams::ReadableStream;
use web_sys::{window, Request, RequestInit, Response};

// `open_reader` is a hypothetical wrapper; the original snippet ran inside an existing async function.
async fn open_reader(url: &str) -> Result<impl AsyncBufRead, JsValue> {
    let mut opts = RequestInit::new();
    opts.method("GET");
    //opts.mode(RequestMode::Cors);

    let window = window().unwrap_throw();
    let request = Request::new_with_str_and_init(url, &opts)?;
    let resp_value = JsFuture::from(window.fetch_with_request(&request)).await?;
    let resp: Response = resp_value.dyn_into()?;

    // Get the response's body as a JS ReadableStream
    let raw_body = resp.body().unwrap_throw();
    let body = ReadableStream::from_raw(raw_body.dyn_into()?);

    // Convert the JS ReadableStream to a Rust AsyncRead: use a BYOB-based reader if the
    // stream supports it, otherwise fall back to reading chunks with the default reader.
    let bytes_reader = match body.try_into_async_read() {
        Ok(async_read) => Either::Left(async_read),
        Err((_err, body)) => Either::Right(
            body.into_stream()
                .map_ok(|js_value| js_value.dyn_into::<Uint8Array>().unwrap_throw().to_vec())
                .map_err(|_js_error| std::io::Error::new(std::io::ErrorKind::Other, "failed to read"))
                .into_async_read(),
        ),
    };

    Ok(BufReader::new(bytes_reader))
}
```

It even works when streaming without going through the proxy. But when streaming from the proxy, it produces the problem described above. Streaming from a local file (getting a `File` object and creating a URL to fetch it from) also produces the correct result (the second picture in my previous post).
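For illustration, here is a synchronous, std-only sketch of what a chunks-to-reader adapter (the role `into_async_read` plays in the fallback branch of the snippet above) has to do: keep the current chunk and hand it out over several `read` calls, pulling the next chunk only once the current one is drained. `ChunkReader` is a hypothetical name; this is not the `futures` or `wasm-streams` implementation.

```rust
use std::io::{self, Read};

/// Hypothetical adapter: turns an iterator of byte chunks into `std::io::Read`.
/// A chunk larger than the caller's buffer is returned over several calls.
pub struct ChunkReader<I: Iterator<Item = Vec<u8>>> {
    chunks: I,
    current: Vec<u8>,
    pos: usize,
}

impl<I: Iterator<Item = Vec<u8>>> ChunkReader<I> {
    pub fn new(chunks: I) -> Self {
        ChunkReader { chunks, current: Vec::new(), pos: 0 }
    }
}

impl<I: Iterator<Item = Vec<u8>>> Read for ChunkReader<I> {
    fn read(&mut self, buf: &mut [u8]) -> io::Result<usize> {
        if buf.is_empty() {
            return Ok(0);
        }
        // Pull the next chunk only when the current one is fully consumed
        // (empty chunks are skipped by the same loop).
        while self.pos >= self.current.len() {
            match self.chunks.next() {
                Some(chunk) => {
                    self.current = chunk;
                    self.pos = 0;
                }
                None => return Ok(0), // end of stream
            }
        }
        let remaining = &self.current[self.pos..];
        let n = remaining.len().min(buf.len());
        buf[..n].copy_from_slice(&remaining[..n]);
        // Remember how far we got; the unread tail is served on the next call.
        self.pos += n;
        Ok(n)
    }
}
```

If an adapter instead copied only `min(buf.len(), chunk.len())` bytes and discarded the rest of each chunk, a bug of that shape would also show up as data missing from the output.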

This sounds like an issue with your proxy, rather than with […]. I'm afraid I can't help you much further, though. 🤷‍♂️


I suspect the `Transfer-Encoding: chunked` header is what makes things go wrong, because it seems I do not receive all the chunks (roughly one in every two). The URL to query through the proxy is:

https://alasky.cds.unistra.fr/cgi/JSONProxy?url=https://rustwasm.github.io/assets/wasm-ferris.png

It seems to be a streaming problem, because fetching it as an array buffer works, and it also works directly in the browser: by following the URL, I can download the Ferris image with no corrupted bytes. That is why I opened this issue.
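One way to narrow this down is to fetch the same resource both ways (streamed and as a whole array buffer) and compare the resulting bytes. A small std-only helper for that comparison; `first_mismatch` is hypothetical and not part of any library mentioned in this thread:

```rust
/// Compares bytes obtained two ways (e.g. streamed vs. fetched as an array buffer)
/// and returns the first offset where they differ, or `None` if they are identical.
pub fn first_mismatch(expected: &[u8], actual: &[u8]) -> Option<usize> {
    expected
        .iter()
        .zip(actual)
        .position(|(a, b)| a != b)
        .or_else(|| {
            // Common prefix is identical: they differ only if the lengths differ.
            if expected.len() != actual.len() {
                Some(expected.len().min(actual.len()))
            } else {
                None
            }
        })
}
```

Where the first difference falls, and whether the streamed output is simply shorter, helps distinguish a truncated body from chunks that were dropped or reordered in the middle.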


It looks like the proxy only uses chunked transfer if the original response also uses chunked transfer, which is not the case for the Ferris image...
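For reference, an HTTP/1.1 client decides how a response body is delimited from its headers, so a proxy that forwards the upstream framing would send a chunked response only when the origin did. Below is a simplified std-only sketch of the rules in RFC 9112 §6.3; `BodyFraming` and `body_framing` are hypothetical names, and status-code special cases (1xx, 204, 304, HEAD) and repeated `Transfer-Encoding` fields are ignored.

```rust
/// How the end of an HTTP/1.1 response body is found (simplified).
#[derive(Debug, PartialEq)]
pub enum BodyFraming {
    Chunked,
    ContentLength(u64),
    UntilClose,
}

/// Picks the body framing from response header (name, value) pairs.
pub fn body_framing(headers: &[(&str, &str)]) -> Result<BodyFraming, String> {
    // Transfer-Encoding, if present, overrides Content-Length.
    let te = headers
        .iter()
        .find(|(name, _)| name.eq_ignore_ascii_case("transfer-encoding"));
    if let Some((_, value)) = te {
        // The body is chunk-delimited only if `chunked` is the final coding;
        // otherwise a response is read until the server closes the connection.
        let last = value.rsplit(',').next().unwrap_or("").trim();
        return Ok(if last.eq_ignore_ascii_case("chunked") {
            BodyFraming::Chunked
        } else {
            BodyFraming::UntilClose
        });
    }
    let cl = headers
        .iter()
        .find(|(name, _)| name.eq_ignore_ascii_case("content-length"));
    if let Some((_, value)) = cl {
        return value
            .trim()
            .parse::<u64>()
            .map(BodyFraming::ContentLength)
            .map_err(|_| format!("invalid Content-Length: {value}"));
    }
    // Neither header: the response body runs until the connection closes.
    Ok(BodyFraming::UntilClose)
}
```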

…ens/wasm-streams#20 is solved). Tell the user that fetching may fail because CORS headers are not set, and that they can open their FITS file by first downloading it manually and then opening it in Aladin Lite

Hi @MattiasBuelens,

We are going through a proxy to fetch files from a server that does not send CORS headers. Requesting a file from our proxy returns these headers:

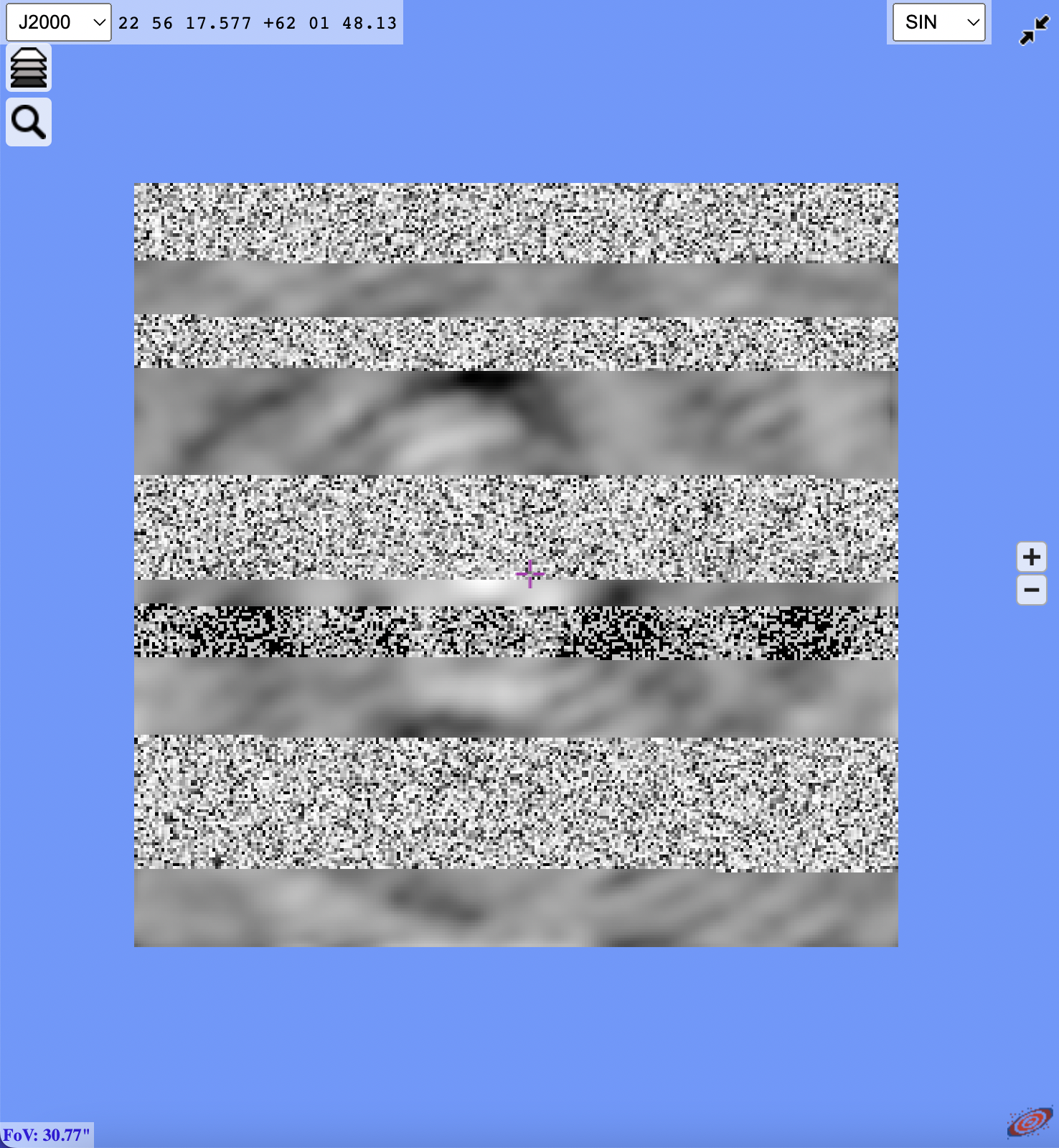

I see that the `Transfer-Encoding` is `chunked`; maybe that is why I am seeing this:

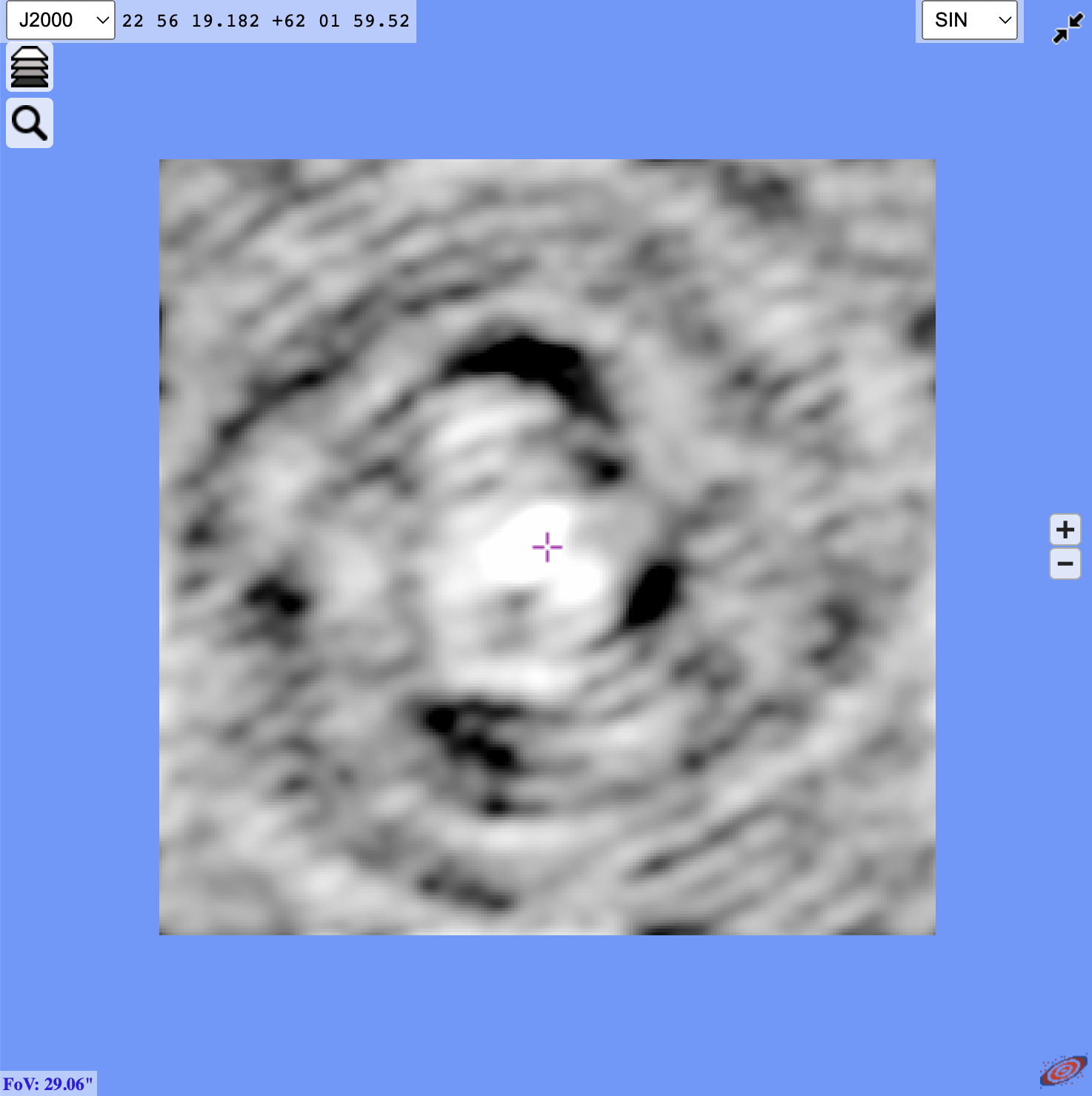
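For context, this is what the chunked transfer coding looks like and how a decoder removes it: each chunk is a hexadecimal size line, CRLF, the data, and another CRLF, ending with a zero-size chunk. Below is a minimal std-only sketch; `decode_chunked` and `ChunkedError` are hypothetical names, chunk extensions are skipped, and trailer fields are not supported. In a browser, this decoding is done by the network stack before the body reaches `fetch`, so these wire-level chunk boundaries are not the same as the `Uint8Array` chunks a `ReadableStream` yields.

```rust
#[derive(Debug, PartialEq)]
pub enum ChunkedError {
    MissingCrlf,
    BadSize,
    Truncated,
}

/// Decodes a complete `Transfer-Encoding: chunked` body held in memory.
pub fn decode_chunked(mut input: &[u8]) -> Result<Vec<u8>, ChunkedError> {
    let mut body = Vec::new();
    loop {
        // Chunk header: hex size (optionally followed by ";extension"), then CRLF.
        let line_end = find_crlf(input).ok_or(ChunkedError::MissingCrlf)?;
        let line = &input[..line_end];
        let size_field = line.split(|&b| b == b';').next().unwrap_or(line);
        let size_str = std::str::from_utf8(size_field).map_err(|_| ChunkedError::BadSize)?;
        let size = usize::from_str_radix(size_str.trim(), 16).map_err(|_| ChunkedError::BadSize)?;
        input = &input[line_end + 2..];

        if size == 0 {
            // Last chunk: expect the terminating CRLF (no trailers in this sketch).
            return if input.starts_with(b"\r\n") { Ok(body) } else { Err(ChunkedError::Truncated) };
        }
        // Chunk data must be followed by its own CRLF.
        let needed = size.checked_add(2).ok_or(ChunkedError::BadSize)?;
        if input.len() < needed {
            return Err(ChunkedError::Truncated);
        }
        body.extend_from_slice(&input[..size]);
        if &input[size..needed] != b"\r\n" {
            return Err(ChunkedError::MissingCrlf);
        }
        input = &input[needed..];
    }
}

fn find_crlf(data: &[u8]) -> Option<usize> {
    data.windows(2).position(|w| w == b"\r\n")
}
```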

If I download the file manually and then drag and drop it (or open it) in my application, I get the correct image:

It is as if some chunks are missing while others are correctly received and parsed.

The code behind both methods is similar:

Thank you very much; maybe you have an idea of what could cause this issue.
